Published On Dec 28, 2023

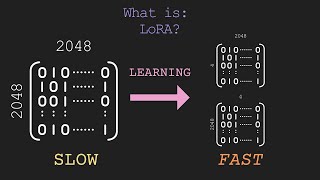

QLoRA reduces memory use enough to FINETUNE Large Language Models (LLMs) of up to 65B parameters on a single 48GB GPU, while preserving the task performance of full 16-bit finetuning. It does this through three contributions: 1. a new 4-bit NormalFloat (NF4) data type, 2. Double Quantization, and 3. Paged Optimizers.
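For readers who want to see blockwise quantization and the NormalFloat idea in code, here is a minimal pure-Python sketch. It builds a 16-level codebook from quantiles of a standard normal distribution (8 positive levels, 7 negative levels and an exact zero, following the asymmetric construction described in the QLoRA paper), absmax-normalizes each block of weights, and stores only the nearest code index plus one scale per block. The function names, the `offset` value and the tiny block size in the example are illustrative assumptions; production implementations pack the 4-bit indices and run on the GPU.

```python
from statistics import NormalDist


def nf4_style_codebook(offset: float = 0.9677):
    """Build a 16-value codebook from N(0, 1) quantiles, normalized to [-1, 1].

    Sketch only: `offset` (the probability of the outermost level) is an
    assumption chosen so that inv_cdf stays finite.
    """
    std_normal = NormalDist()

    def quantiles(n):
        # n evenly spaced probabilities from `offset` down toward 0.5 (0.5 excluded)
        step = (offset - 0.5) / n
        return [std_normal.inv_cdf(offset - i * step) for i in range(n)]

    positive = quantiles(8)                  # 8 levels above zero
    negative = [-q for q in quantiles(7)]    # 7 levels below zero
    codes = sorted(negative + [0.0] + positive)  # exact zero included
    largest = max(abs(c) for c in codes)
    return [c / largest for c in codes]


def quantize_blockwise(weights, codebook, block_size=64):
    """Absmax-normalize each block, then map each value to its nearest code index."""
    indices, scales = [], []
    for start in range(0, len(weights), block_size):
        block = weights[start:start + block_size]
        scale = max(abs(w) for w in block) or 1.0  # guard against all-zero blocks
        scales.append(scale)
        for w in block:
            x = w / scale  # now in [-1, 1]
            nearest = min(range(len(codebook)), key=lambda i: abs(codebook[i] - x))
            indices.append(nearest)
    return indices, scales


def dequantize_blockwise(indices, scales, codebook, block_size=64):
    """Look up each code and rescale it by its block's absmax constant."""
    return [codebook[idx] * scales[pos // block_size] for pos, idx in enumerate(indices)]


# Hypothetical toy weights, split into two blocks of 4
codebook = nf4_style_codebook()
weights = [0.12, -0.5, 0.03, 0.9, -0.07, 0.0, 0.44, -0.91]
idx, scales = quantize_blockwise(weights, codebook, block_size=4)
approx = dequantize_blockwise(idx, scales, codebook, block_size=4)
print([round(a, 3) for a in approx])
```

Because each block has its own scale, a single outlier only affects the precision of its own block rather than the whole tensor, which is the motivation for blockwise quantization.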

In this video, we explain the idea behind each of the three contributions and show how they combine to form QLoRA.

⌚️ ⌚️ ⌚️ TIMESTAMPS ⌚️ ⌚️ ⌚️

0:00 - QLoRA

1:34 - Quantization

3:10 - Problem with Quantization

3:50 - Blockwise Quantization

5:42 - NormalFloat

6:37 - Double Quantization

7:25 - Paged Optimizers

8:45 - QLoRA Finetuning

9:30 - LoRA vs QLoRA

10:28 - Results
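To make the Double Quantization saving concrete, here is a back-of-the-envelope calculation. It assumes the settings reported in the QLoRA paper: one 32-bit absmax constant per block of 64 weights without double quantization, and with it, 8-bit first-level constants that are grouped into blocks of 256, each sharing one 32-bit second-level constant. The function names are illustrative.

```python
def constant_overhead_bits(block_size=64, const_bits=32):
    """Bits per parameter spent on one absmax constant per weight block."""
    return const_bits / block_size


def double_quant_overhead_bits(block_size=64, const_bits=8,
                               second_block_size=256, second_const_bits=32):
    """Bits per parameter when the first-level constants are themselves quantized."""
    return const_bits / block_size + second_const_bits / (block_size * second_block_size)


single = constant_overhead_bits()        # 32 / 64
double = double_quant_overhead_bits()    # 8 / 64 + 32 / (64 * 256)
saved_bits = single - double
params = 65e9                            # a 65B-parameter model
saved_gb = saved_bits * params / 8 / 1e9  # bits -> bytes -> GB
print(f"{single:.3f} -> {double:.3f} bits/param, saving ~{saved_gb:.1f} GB for 65B params")
# prints: 0.500 -> 0.127 bits/param, saving ~3.0 GB for 65B params
```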

🛠 🛠 🛠 MY SOFTWARE TOOLS 🛠 🛠 🛠

✍️ Notion - https://affiliate.notion.so/aibites-yt

✍️ Notion AI - https://affiliate.notion.so/ys9rqzv2vdd8

📹 OBS Studio for screen recording - https://obsproject.com

📼 Manim for some animations - https://www.manim.community

📚 📚 📚 BOOKS I HAVE READ, REFER AND RECOMMEND 📚 📚 📚

📖 Deep Learning by Ian Goodfellow - https://amzn.to/3Wnyixv

📙 Pattern Recognition and Machine Learning by Christopher M. Bishop - https://amzn.to/3ZVnQQA

📗 Machine Learning: A Probabilistic Perspective by Kevin Murphy - https://amzn.to/3kAqThb

📘 Multiple View Geometry in Computer Vision by R Hartley and A Zisserman - https://amzn.to/3XKVOWi

MY KEY LINKS

YouTube: @aibites

Twitter: @ai_bites

Patreon: ai_bites

Github: https://github.com/ai-bites

WHO AM I?

I am a Machine Learning Researcher/Practitioner who has seen the grind of both academia and start-ups. I started my career as a software engineer 15 years ago. Thanks to my love of mathematics (coupled with a glimmer of luck), I graduated with a Master's in Computer Vision and Robotics in 2016, just as the current AI revolution was getting started. Life has changed for the better ever since.

#machinelearning #deeplearning #aibites